A self-hosted, intelligent news ecosystem built to reclaim attention and strengthen digital privacy.

My personal media consumption needed more than a generic aggregator. It needed a coherent ecosystem that filtered noise, ran strictly on local hardware, and turned information overload into actionable insight.

I architected a full-stack local environment end to end: a resource-efficient AI analysis pipeline, a minimalist mobile-first UI, and a granular quality control system. The result is a unified platform for daily reading that supports mindful consumption while running entirely on local hardware.

Content Engine

Hardware-Aware AI Pipeline

To make running local LLMs viable on standard hardware, I engineered a “Fail-Fast” decision pipeline that minimizes GPU overhead. Instead of processing every article fully, the system strictly enforces a tiered analysis order:

Language Check: Immediate discard of articles in non-target languages, before any scraping.

Scoring Phase (Fast): The AI first reads the raw content to assign a Quality Score and Clickbait Probability. This is a rapid, low-cost operation.

Filtering Gate: If an article falls below the defined Quality Threshold or exceeds the Clickbait Threshold, processing stops immediately.

Rewrite Phase (Intensive): Only the high-quality survivors are passed to the expensive rewriting model to generate summaries and fix headlines.

This approach reduced GPU runtime by approximately 60%, allowing the system to run in the background without impacting daily workstation performance.
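The tiered order above can be sketched as a single function that returns as early as possible. This is a minimal illustration, not the project's code: `process_article`, `Scores`, `Result`, the injected `fetch`/`score`/`rewrite` callables, and the default thresholds (60 and 0.3) are all hypothetical stand-ins for the real scraper and local models.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Scores:
    quality: float    # 0-100, higher is better
    clickbait: float  # estimated probability, 0.0-1.0


@dataclass
class Result:
    status: str                     # "skipped_language", "rejected" or "rewritten"
    headline: Optional[str] = None
    summary: Optional[str] = None


def process_article(
    url: str,
    language: str,
    *,
    target_languages: set[str],
    fetch: Callable[[str], str],                # scraper (hypothetical)
    score: Callable[[str], Scores],             # cheap scoring model call (hypothetical)
    rewrite: Callable[[str], tuple[str, str]],  # expensive model call -> (headline, summary)
    min_quality: float = 60.0,                  # illustrative default
    max_clickbait: float = 0.3,                 # illustrative default
) -> Result:
    # Tier 1: language check on feed metadata, before the page is even scraped.
    if language not in target_languages:
        return Result("skipped_language")

    body = fetch(url)

    # Tier 2: fast scoring pass over the raw content.
    s = score(body)

    # Tier 3: filtering gate. Returning here means the rewrite model never runs.
    if s.quality < min_quality or s.clickbait > max_clickbait:
        return Result("rejected")

    # Tier 4: only survivors pay for the intensive rewrite.
    headline, summary = rewrite(body)
    return Result("rewritten", headline=headline, summary=summary)
```

The gate itself is two comparisons, so any GPU savings come from the share of articles that return before `rewrite` is ever called.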

Intelligent Transformation

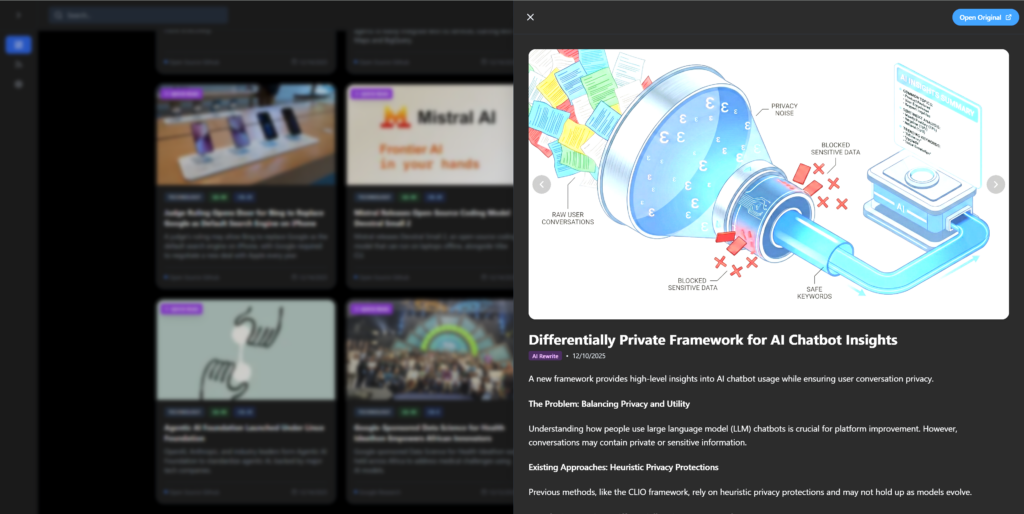

Headline Rewrite & Summary

Beyond simple filtering, the system actively intervenes to reduce cognitive load. I implemented an **AI Headline Rewrite** module that treats the original title as unreliable user input.

Purpose: To de-sensationalize news and neutralize emotional triggers (e.g., changing “You Won’t Believe What X Did” to “X Announces New Policy”).

Implementation: The LLM analyzes the full article body to extract the core factual event, then generates a purely descriptive title.
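One way to treat the original title as untrusted input is to leave it out of the prompt entirely, so the model can only work from the body. The sketch below assumes that approach; the prompt wording, the 12-word cap, the 6000-character truncation, and the `looks_sensational` post-check are hypothetical choices, not the module's actual prompt.

```python
import re

# Hypothetical instructions; the wording and the 12-word cap are assumptions.
INSTRUCTIONS = (
    "You are a neutral news editor.\n"
    "1. Identify the single core factual event in the article below.\n"
    "2. Write one headline of at most 12 words that states that event plainly.\n"
    "3. Do not use questions, superlatives, second-person address or teasers.\n"
    "Return only the headline."
)

# Cheap post-check for patterns the rewrite is meant to remove.
_SENSATIONAL = re.compile(
    r"\b(you won[’']t believe|shocking|what happens next|this is why)\b|[!?]$",
    re.IGNORECASE,
)


def build_headline_prompt(article_body: str, max_chars: int = 6000) -> str:
    """Build a rewrite prompt from the body only.

    The original title is deliberately left out so the model cannot echo
    its framing; the headline has to come from the facts in the body.
    """
    body = " ".join(article_body.split())[:max_chars]  # collapse whitespace, fit context
    return f"{INSTRUCTIONS}\n\nARTICLE:\n{body}\n\nHEADLINE:"


def looks_sensational(headline: str) -> bool:
    """True if a generated headline still contains obvious clickbait markers."""
    return bool(_SENSATIONAL.search(headline.strip()))
```

A headline that fails `looks_sensational` could simply be regenerated or fall back to a plain "Source: first sentence" title.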

Quality Control

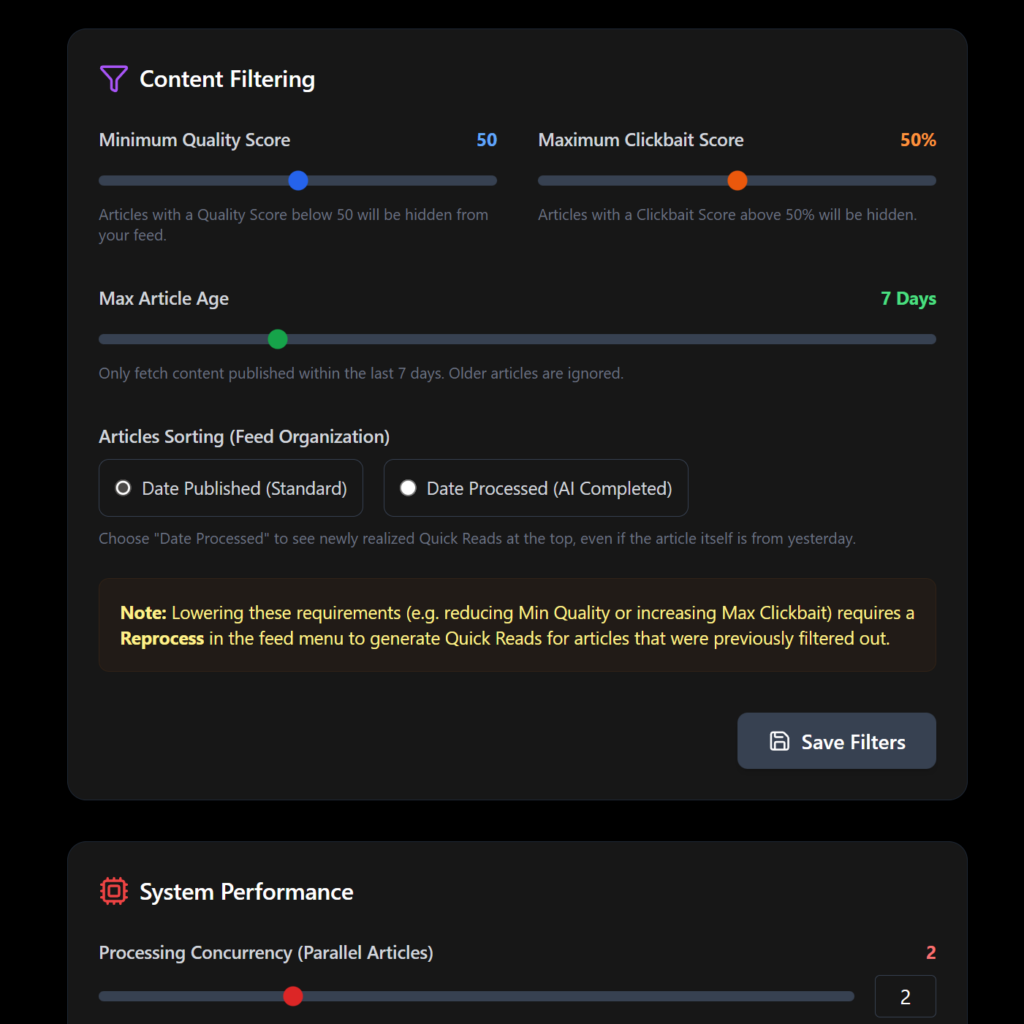

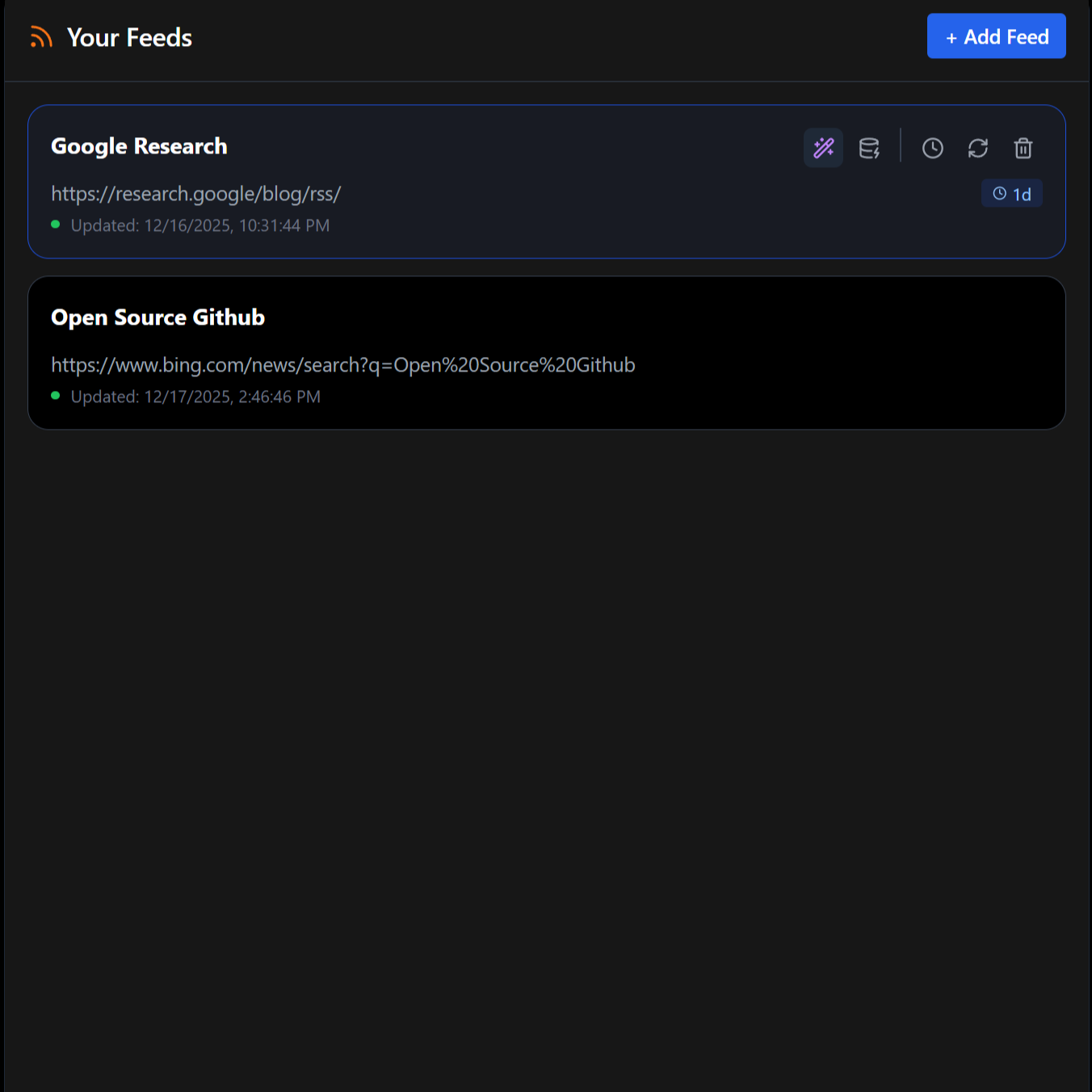

Granular Filtering Configuration

The system moves beyond simple keyword blocking, relying instead on semantic understanding to curate the feed. I integrated specific, user-tunable controls to define “quality”:

Minimum Quality Score (0-100): A threshold that filters out content scoring low on depth, neutrality, and factual density.

Maximum Clickbait Tolerance (0-100%): A probabilistic filter that hides any article whose estimated clickbait probability exceeds this value, catching sensationalist or misleading headlines.

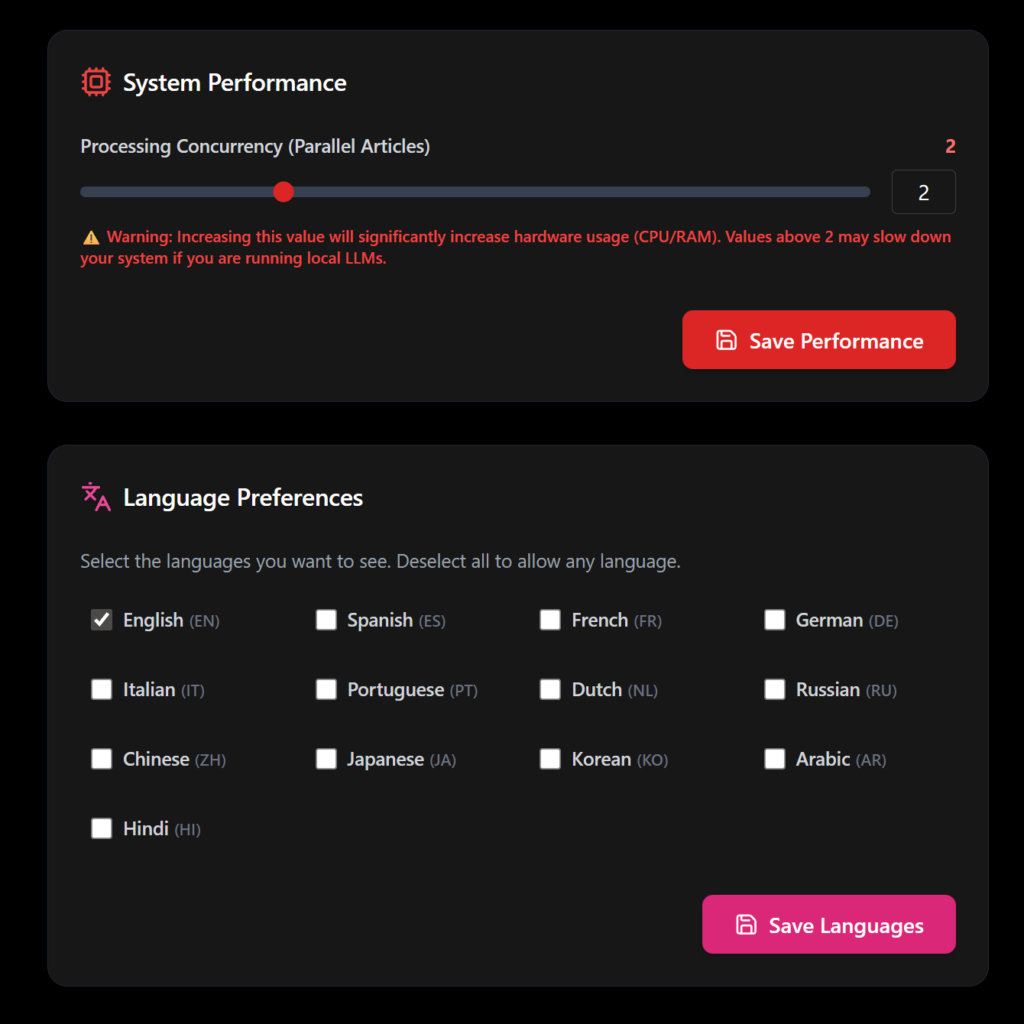

Processing Concurrency: Adjustable thread control (1-5 concurrent articles) to balance speed against system heat/fan noise.

Max Article Age: Automated pruning of stale content to ensure relevance.

Diff-Based Change Detection: Custom logic for static sites that triggers a new feed item only when significant HTML changes occur, ignoring footer and layout updates.
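The quality, clickbait, concurrency, and age controls map naturally onto a small validated settings object. The sketch below is illustrative only: `FilterSettings`, `prune_stale`, `run_batch`, and every default value are hypothetical, and a thread pool is just one assumed way to cap how many articles are processed at once.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Iterable


@dataclass(frozen=True)
class FilterSettings:
    min_quality: int = 60        # Minimum Quality Score, 0-100 (hypothetical default)
    max_clickbait_pct: int = 30  # Maximum Clickbait Tolerance, 0-100 %
    concurrency: int = 2         # articles processed at once, 1-5
    max_age_days: int = 7        # Max Article Age

    def __post_init__(self) -> None:
        # Reject out-of-range values instead of silently clamping them.
        if not 0 <= self.min_quality <= 100:
            raise ValueError("min_quality must be in 0-100")
        if not 0 <= self.max_clickbait_pct <= 100:
            raise ValueError("max_clickbait_pct must be in 0-100")
        if not 1 <= self.concurrency <= 5:
            raise ValueError("concurrency must be in 1-5")

    def passes(self, quality: float, clickbait_prob: float) -> bool:
        # Compare in probability space to avoid float error from multiplying by 100.
        return quality >= self.min_quality and clickbait_prob <= self.max_clickbait_pct / 100


def prune_stale(published: dict[str, datetime], settings: FilterSettings,
                now: datetime | None = None) -> list[str]:
    """Return the IDs of articles older than max_age_days."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=settings.max_age_days)
    return [aid for aid, ts in published.items() if ts < cutoff]


def run_batch(items: Iterable[str], work: Callable[[str], str],
              settings: FilterSettings) -> list[str]:
    # The worker count caps how many articles hit the GPU at the same time.
    with ThreadPoolExecutor(max_workers=settings.concurrency) as pool:
        return list(pool.map(work, items))
```

Validating in `__post_init__` keeps a mistyped setting from silently turning into an unlimited worker pool or a filter that lets everything through.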
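For static sites, the change detection described above can be approximated by stripping boilerplate regions before diffing. This sketch assumes that "significant" means more than 5% of the remaining visible text changed, and that boilerplate lives in `footer`, `nav`, `header`, `aside`, `script`, and `style` elements; both the tag list and the `is_significant_change` threshold are hypothetical. It uses only the standard library's `html.parser` and `difflib`.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser

# Assumed boilerplate containers; real sites may need per-source rules.
IGNORED_TAGS = {"footer", "nav", "header", "aside", "script", "style"}


class _ContentText(HTMLParser):
    """Collect visible text, skipping anything nested inside IGNORED_TAGS."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # how many ignored elements we are currently inside
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in IGNORED_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in IGNORED_TAGS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth == 0 and data.strip():
            self.parts.append(" ".join(data.split()))


def content_text(html: str) -> str:
    parser = _ContentText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def is_significant_change(old_html: str, new_html: str, min_change: float = 0.05) -> bool:
    """True when the main content differs by more than min_change (0-1)."""
    old, new = content_text(old_html), content_text(new_html)
    similarity = SequenceMatcher(None, old, new).ratio()
    return (1.0 - similarity) > min_change
```

`SequenceMatcher` can get slow on very large pages; comparing a hash of `content_text` first and only diffing when the hashes differ would be a cheap refinement.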

Resilience & Failsafes

Recognizing that local AI models can be non-deterministic, I put the user in ultimate control of the data. The system includes manual overrides to recover from model failures:

Force Reprocessing: A dedicated control to invalidate existing analysis and force the AI to re-read and re-score any feed, correcting potential “bad rolls” or hallucinations.

Feed Purge: A destructive failsafe to instantly wipe all cached articles and metadata for a specific source. This ensures that if a feed becomes corrupted or the AI repeatedly misinterprets it, the database can be reset to a clean state without affecting the rest of the system.
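Both failsafes reduce to scoped writes against the article store. The sketch below assumes SQLite and a hypothetical `articles` table in which NULL analysis columns mean "not yet analyzed", so invalidating a feed simply puts its rows back in the pipeline's queue; the schema and function names are illustrative, not the project's.

```python
import sqlite3

# Hypothetical schema: NULL quality marks an article as pending analysis.
SCHEMA = """
CREATE TABLE IF NOT EXISTS articles (
    id        INTEGER PRIMARY KEY,
    feed_id   INTEGER NOT NULL,
    title     TEXT NOT NULL,
    quality   REAL,
    clickbait REAL,
    summary   TEXT
);
"""


def force_reprocess(conn: sqlite3.Connection, feed_id: int) -> int:
    """Invalidate AI analysis for one feed so the pipeline re-scores it."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "UPDATE articles SET quality = NULL, clickbait = NULL, summary = NULL "
            "WHERE feed_id = ?",
            (feed_id,),
        )
    return cur.rowcount


def purge_feed(conn: sqlite3.Connection, feed_id: int) -> int:
    """Delete every cached article for one feed, leaving other feeds untouched."""
    with conn:
        cur = conn.execute("DELETE FROM articles WHERE feed_id = ?", (feed_id,))
    return cur.rowcount


def pending(conn: sqlite3.Connection) -> list[int]:
    """IDs the pipeline would pick up on its next pass."""
    return [row[0] for row in conn.execute(
        "SELECT id FROM articles WHERE quality IS NULL ORDER BY id")]
```

Scoping every statement with `WHERE feed_id = ?` is what keeps the purge from touching the rest of the database.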

Digital Platform

Cleanliness & Mobile-First Design

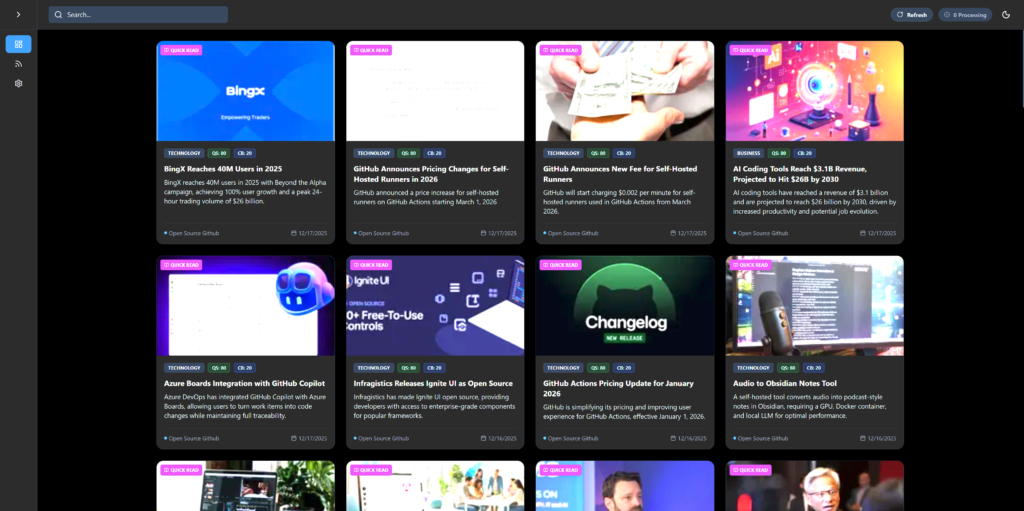

The interface was stripped down to the essentials to promote focus. I rebuilt the frontend with strict adherence to usability standards:

Mobile-First Architecture: Large touch targets and bottom-weighted navigation for one-handed use.

Quickread Panel: A dedicated slide-over interface that presents the AI-generated high-density summary. This allows users to consume the “Core Facts” of a story instantly without navigating away to the ad-heavy source URL.

Distraction-Free Reading: A clean, uniform card layout that normalizes content from disparate sources.

Performance: Sub-second load times and efficient DOM management to ensure the app feels like a native tool rather than a web page.
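The uniform card layout depends on mapping every source into the same shape before rendering. The sketch below assumes a hypothetical `Card` type and entry keys (`ai_headline`, `ai_summary`, `title`, `description`, `content`, `link`); it prefers the AI-rewritten fields, strips markup, and truncates to fixed lengths (120 and 280 characters, both illustrative) so every card has the same footprint.

```python
from __future__ import annotations

import html
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    source: str
    headline: str
    summary: str
    url: str


_TAG = re.compile(r"<[^>]+>")


def _plain(text: str, limit: int) -> str:
    # Drop tags, decode entities, collapse whitespace, then cap the length.
    text = html.unescape(_TAG.sub(" ", text))
    text = " ".join(text.split())
    return text if len(text) <= limit else text[: limit - 1].rstrip() + "…"


def to_card(source: str, entry: dict[str, str]) -> Card:
    """Normalize one feed entry; AI-rewritten fields win over the originals."""
    headline = entry.get("ai_headline") or entry.get("title") or "(untitled)"
    summary = entry.get("ai_summary") or entry.get("description") or entry.get("content") or ""
    return Card(
        source=source,
        headline=_plain(headline, 120),
        summary=_plain(summary, 280),
        url=entry.get("link", ""),
    )
```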